VirtualMetric DataStream

VirtualMetric DataStream is an automation engine for processing and routing security data across multiple platforms and destinations. Built for modern security operations, DataStream streamlines the collection, transformation, and distribution of security telemetry from diverse sources to leading SIEM platforms, data lakes, and security analytics solutions.

Universal Security Data Processing

DataStream serves as the central nervous system for your security infrastructure, automatically discovering, processing, and routing telemetry data. The platform transforms raw security logs into standardized formats that integrate with your existing security ecosystem across multiple platforms and schemas.

Multi-Schema Support

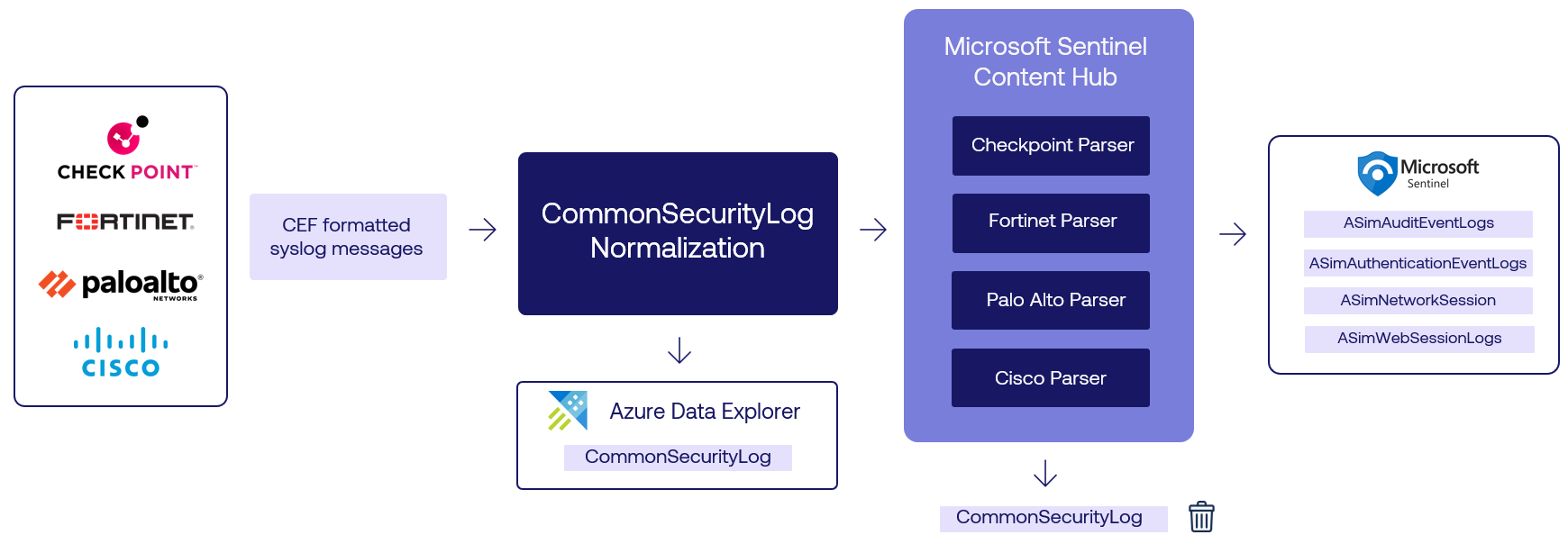

DataStream natively supports industry-standard security schemas, enabling seamless integration across diverse security platforms:

- ASIM (Advanced Security Information Model) - Microsoft Sentinel's unified data model for comprehensive threat detection

- OCSF (Open Cybersecurity Schema Framework) - AWS Security Lake and multi-vendor security environments

- ECS (Elastic Common Schema) - Elasticsearch, Elastic Security, and the complete Elastic Stack ecosystem

- CIM (Common Information Model) - Splunk Enterprise Security and SOAR platforms

- UDM (Unified Data Model) - Google SecOps and Chronicle SIEM

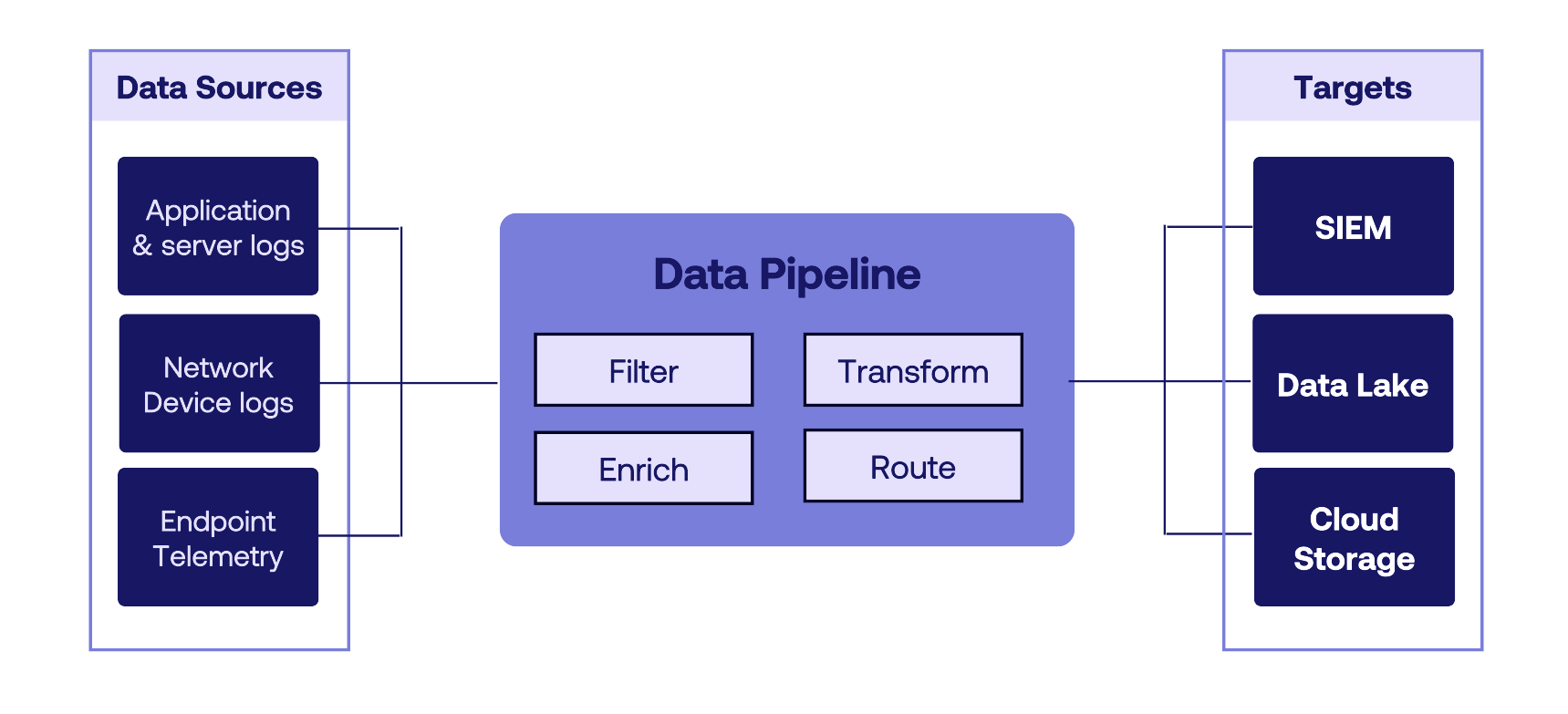

Telemetry Pipelines

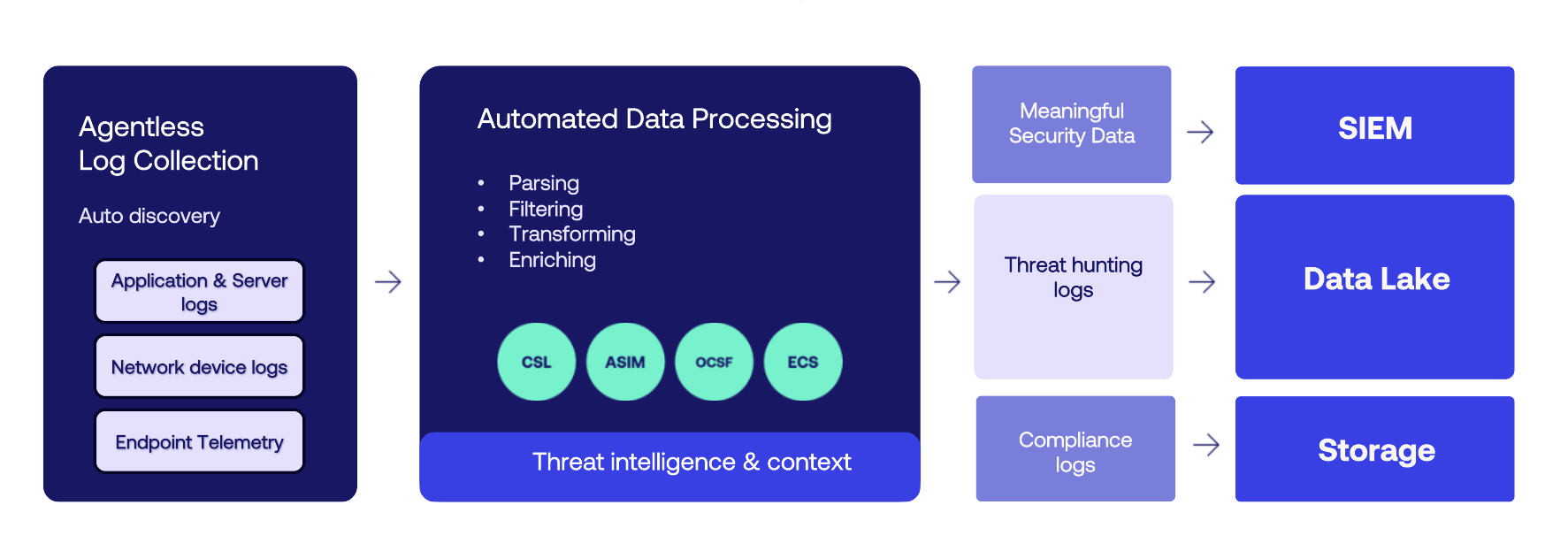

A telemetry pipeline is a comprehensive end-to-end system that manages the entire journey of log data from source to destination, handling collection, processing, and routing to various endpoints. It is responsible for ensuring that the right information reaches the right destination at the right time.

Users can design a pipeline to route data based on data type, source, or other criteria. Each event that enters the pipeline passes through three stages: collection, processing, and routing.
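To make the stage model concrete, here is a minimal Python sketch of a pipeline built from chained stages. It is not DataStream's API or configuration format: the function names (`collect`, `drop_empty`, `tag_type`, `run_pipeline`, `route`), the event shape, and the "login" heuristic are all hypothetical and chosen only for illustration.

```python
from typing import Callable, Iterable, Iterator

Event = dict
Stage = Callable[[Iterable[Event]], Iterator[Event]]


def collect(raw_lines: Iterable[str], source: str) -> Iterator[Event]:
    """Collection: wrap each raw log line in an event that records its source."""
    for line in raw_lines:
        yield {"source": source, "raw": line.strip()}


def drop_empty(events: Iterable[Event]) -> Iterator[Event]:
    """Processing stage: discard events with no content."""
    for event in events:
        if event["raw"]:
            yield event


def tag_type(events: Iterable[Event]) -> Iterator[Event]:
    """Processing stage: label each event with a coarse data type (toy heuristic)."""
    for event in events:
        data_type = "auth" if "login" in event["raw"].lower() else "other"
        yield {**event, "type": data_type}


def run_pipeline(events: Iterable[Event], stages: list[Stage]) -> list[Event]:
    """Apply each processing stage in order and materialize the result."""
    for stage in stages:
        events = stage(events)
    return list(events)


def route(events: Iterable[Event]) -> dict[str, list[Event]]:
    """Routing: group events under a key built from data type and source."""
    routed: dict[str, list[Event]] = {}
    for event in events:
        key = f"{event['type']}:{event['source']}"
        routed.setdefault(key, []).append(event)
    return routed


raw = ["Failed login for admin", "", "Interface eth0 up"]
processed = run_pipeline(collect(raw, source="firewall"), [drop_empty, tag_type])
routed = route(processed)
```

Because each stage only consumes and yields events, stages can be reordered or swapped without touching the others, which is the property that lets a pipeline be redesigned per data type or source.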

Data Collection

The pipeline first gathers raw data from various sources through agentless log collection with automated discovery:

- Application & Server Logs - Comprehensive application monitoring and server-side event collection

- Network Device Logs - Routers, firewalls, switches, and network appliance telemetry

- Endpoint Telemetry - Workstation, server, and mobile device security events

- Cloud Services & Infrastructure - Multi-cloud security events and infrastructure monitoring

Data Processing

The pipeline processes data through a series of automated stages: parsing and filtering, transformation and enrichment, threat intelligence integration, and context enhancement. The processing engine provides:

- Parsing & Normalization - Intelligent field extraction and data standardization across multiple schemas

- Filtering & Enrichment - Context-aware event filtering with real-time threat intelligence integration

- Schema Transformation - Seamless conversion between ASIM, OCSF, ECS, and CIM formats

- Validation & Quality Assurance - Data integrity checks and completeness validation
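The processing steps listed above can be sketched in a few lines of Python. This is an illustrative sketch, not DataStream's implementation: the `key=value` input format, the internal field names (`src_ip`, `dst_ip`, `action`), the filter rule, and the helper functions are assumptions. The target field names are a simplified subset of each schema's network fields, with ECS and OCSF dotted names kept flat rather than nested.

```python
# Hypothetical mapping from an internal normalized form to each schema's
# field names (simplified subset, for illustration only).
FIELD_MAPS = {
    "ASIM": {"src_ip": "SrcIpAddr", "dst_ip": "DstIpAddr", "action": "DvcAction"},
    "ECS": {"src_ip": "source.ip", "dst_ip": "destination.ip", "action": "event.action"},
    "OCSF": {"src_ip": "src_endpoint.ip", "dst_ip": "dst_endpoint.ip", "action": "action"},
    "CIM": {"src_ip": "src_ip", "dst_ip": "dest_ip", "action": "action"},
}

REQUIRED_FIELDS = ("src_ip", "dst_ip", "action")


def parse(line: str) -> dict:
    """Parsing and normalization: extract key=value pairs into internal fields."""
    raw = dict(token.split("=", 1) for token in line.split() if "=" in token)
    return {
        "src_ip": raw.get("src"),
        "dst_ip": raw.get("dst"),
        "action": raw.get("action"),
    }


def is_valid(event: dict) -> bool:
    """Validation: every required field must be present and non-empty."""
    return all(event.get(field) for field in REQUIRED_FIELDS)


def keep(event: dict) -> bool:
    """Filtering: drop allowed traffic (an assumed, example-only rule)."""
    return event["action"] != "allow"


def to_schema(event: dict, schema: str) -> dict:
    """Schema transformation: rename internal fields to the target schema."""
    mapping = FIELD_MAPS[schema]
    return {mapping[field]: value for field, value in event.items()}


lines = [
    "src=10.0.0.5 dst=8.8.8.8 action=deny",
    "src=10.0.0.6 dst=1.1.1.1 action=allow",  # removed by the filter
    "dst=1.1.1.1 action=deny",                # removed by validation (no src)
]
events = [e for e in map(parse, lines) if is_valid(e) and keep(e)]
ecs_events = [to_schema(e, "ECS") for e in events]
asim_events = [to_schema(e, "ASIM") for e in events]
```

Normalizing to one internal form first means each additional schema needs only one new mapping table, rather than a converter for every pair of schemas.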

Data Routing

The processed data is intelligently directed to appropriate destinations based on security requirements, cost considerations, and functionality needs. DataStream supports flexible routing to:

- SIEM Platforms - Microsoft Sentinel, Elasticsearch/Elastic Security, and Splunk Enterprise Security with optimized ingestion

- Data Lakes - AWS Security Lake and Azure Data Lake with automated partitioning

- Storage Solutions - Cost-effective long-term retention in Azure Blob Storage and AWS S3

- Analytics Platforms - Splunk, custom APIs, and real-time streaming endpoints

Enterprise Challenges

In enterprise environments, pipelines are essential due to the need to handle massive volumes of data from diverse security tools and platforms. Organizations face complex challenges including managing multiple data formats and schemas, ensuring consistent processing across different security platforms, maintaining real-time threat detection capabilities, and optimizing costs while preserving security coverage.

Data must reach the appropriate destinations for security monitoring, threat detection, compliance, and analysis efficiently and accurately. This requires real-time flow management, consistent processing and formatting, data integrity throughout the journey, and sophisticated routing decisions across multiple platforms.

DataStream's intelligent routing capabilities enable organizations to direct different types of log data to the most appropriate platforms and Azure services based on their security, operational, and compliance requirements:

Meaningful Security Data

Critical security events require real-time monitoring and immediate alerting across multiple platforms. DataStream intelligently routes these logs to Microsoft Sentinel for ASIM-based threat detection, AWS Security Lake for OCSF compliance, Elasticsearch for ECS-formatted security analytics, and Splunk Enterprise Security for CIM-compliant data ingestion.

Threat Hunting Logs

Historical security data essential for threat hunting and incident investigation is efficiently routed to Azure Data Explorer and Data Lakes for long-term analysis, advanced querying, and cross-platform correlation.

Compliance Logs

Regulatory compliance data requiring secure long-term retention is automatically routed to Azure Blob Storage and AWS S3 for cost-effective storage, comprehensive audit trails, and automated compliance reporting.

This unified approach provides several enterprise benefits including cost optimization through intelligent routing, improved query performance across multiple platforms, flexible retention policies, comprehensive multi-platform security coverage, and simplified management of complex security data workflows.
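The three routing categories described above can be expressed as a small routing table. The sketch below is hypothetical: the destination names come from this page, but the `classify` rules (a `severity` threshold and a `regulated` flag) and the function names are assumptions made for illustration, not DataStream behavior.

```python
# Destinations per category, taken from the categories described on this page.
ROUTES = {
    "security": [
        "Microsoft Sentinel",
        "AWS Security Lake",
        "Elasticsearch",
        "Splunk Enterprise Security",
    ],
    "threat_hunting": ["Azure Data Explorer", "Data Lake"],
    "compliance": ["Azure Blob Storage", "AWS S3"],
}


def classify(event: dict) -> str:
    """Assign a routing category using assumed, example-only rules."""
    if event.get("severity", 0) >= 7:
        return "security"        # critical events need real-time alerting
    if event.get("regulated"):
        return "compliance"      # retained long term for audits
    return "threat_hunting"      # kept for historical analysis


def destinations_for(event: dict) -> list[str]:
    """Return every destination that should receive this event."""
    return ROUTES[classify(event)]


critical = {"severity": 9, "message": "Multiple failed admin logins"}
audit = {"severity": 2, "regulated": True, "message": "Payment record accessed"}
routine = {"severity": 1, "message": "DNS query"}
```

Here `destinations_for(critical)` returns the four real-time platforms, `destinations_for(audit)` returns the two storage targets, and `destinations_for(routine)` returns the threat hunting targets. Keeping the table separate from the classification logic means retention or cost decisions can change without touching the rules.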